IBM Just Cracked Quantum Computing. Are You Ready?

Over four decades ago, physicist Richard Feynman imagined using quantum mechanics to solve problems impossible for classical machines. “Nature isn’t classical, dammit, and if you want to make a simulation of nature, you’d better make it quantum mechanical,” he stated in 1981.

This early vision laid the groundwork for quantum computing, which harnesses quantum bits (qubits) that leverage weird properties like superposition and entanglement to process information in powerful new ways. Unlike a classical bit, which is either 0 or 1, a qubit can exist in a superposition of 0 and 1 at the same time, and a register of n qubits can hold a superposition over all 2^n bit strings at once.
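To make superposition concrete, here is a minimal state-vector sketch in plain Python. It is an illustration only, not how real quantum hardware or SDKs are programmed, and the function names are hypothetical. A qubit is modeled as two complex amplitudes, and the Hadamard gate turns |0⟩ into an equal superposition. Combining n such qubits with a tensor product yields a state described by 2^n amplitudes.

```python
import math

# A qubit is a pair of complex amplitudes for |0> and |1>.
# Measuring it gives 0 with probability |amp0|^2 and 1 with probability |amp1|^2.
ZERO = [1 + 0j, 0j]

def hadamard(qubit):
    """Hadamard gate: maps |0> to an equal superposition of |0> and |1>."""
    a0, a1 = qubit
    s = 1 / math.sqrt(2)
    return [s * (a0 + a1), s * (a0 - a1)]

def tensor(state_a, state_b):
    """Combine two registers: every amplitude of one times every amplitude of the other."""
    return [x * y for x in state_a for y in state_b]

def probabilities(state):
    """Born rule: probability of each basis state is |amplitude|^2."""
    return [abs(amp) ** 2 for amp in state]

plus = hadamard(ZERO)
print([round(p, 6) for p in probabilities(plus)])  # [0.5, 0.5]

# Ten qubits, each in superposition: the joint state has 2**10 amplitudes.
register = [1 + 0j]
for _ in range(10):
    register = tensor(register, plus)
print(len(register))  # 1024
```

Measuring the register still returns just one 10-bit string. A quantum algorithm has to arrange its operations so that the useful answers become the likely ones.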

Pairs of qubits can also become entangled. Their states are then linked so that measuring one immediately tells you something about the other, with correlations no classical system can reproduce. Combined with interference, which lets a quantum algorithm reinforce paths to the right answer and cancel paths to wrong ones, these principles mean that a sufficiently large quantum computer could, in theory, solve certain problems (drug molecule simulations, complex optimizations, cryptographic math) in minutes that would take classical supercomputers millions of years. Achieving such quantum advantage (doing useful tasks faster or cheaper than classical supercomputers) has been the holy grail for researchers and companies worldwide.
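The entanglement correlation can be seen in a small state-vector simulation, again in plain Python, purely illustrative, and using hypothetical helper names. A Hadamard followed by a CNOT produces the Bell state (|00⟩ + |11⟩)/√2. Sampled measurements then only ever show the two qubits agreeing, even though each qubit on its own is a fair 50/50 coin. No signal passes between them; the link appears only when the outcomes are compared.

```python
import math
import random

# Two-qubit state as amplitudes for |00>, |01>, |10>, |11> (left bit = qubit 0).

def hadamard_qubit0(state):
    """Hadamard on qubit 0: mixes each |0x> amplitude with its |1x> partner."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_0_to_1(state):
    """CNOT with qubit 0 as control: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def measure(state, rng):
    """Sample one outcome with Born-rule probabilities |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(["00", "01", "10", "11"], weights=probs)[0]

bell = cnot_0_to_1(hadamard_qubit0([1, 0, 0, 0]))  # (|00> + |11>) / sqrt(2)
rng = random.Random(42)
samples = [measure(bell, rng) for _ in range(1000)]
print(sorted(set(samples)))  # ['00', '11']: the qubits always agree
```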

Yet progress has been gated by a fundamental challenge: qubits are extraordinarily fragile. The same quantum effects that give qubits their power also make them prone to errors from the slightest noise or disturbance. Vibrations, temperature fluctuations, or stray electromagnetic fields can knock qubits out of their delicate states.

For current “noisy” quantum devices, this means errors accumulate quickly, limiting how complex a computation they can reliably run. So while processors with dozens to more than a thousand qubits exist, they are classed as NISQ (Noisy Intermediate-Scale Quantum) devices: useful for research, but not yet outperforming classical computers on practical tasks.
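A back-of-the-envelope model shows why noise limits circuit size. Suppose every gate fails independently with the same probability p. That is a simplification, since real errors vary by gate and can be correlated. Under it, the chance that a circuit of N gates runs error-free is (1 − p)^N. The 0.1% per-gate error rate below is an illustrative assumption.

```python
import math

def success_probability(error_per_gate: float, num_gates: int) -> float:
    """Chance that no gate fails, assuming independent, identical gate errors."""
    return (1 - error_per_gate) ** num_gates

def max_gates(error_per_gate: float, target_success: float) -> int:
    """Largest circuit size whose error-free probability stays >= target_success."""
    return math.floor(math.log(target_success) / math.log(1 - error_per_gate))

p = 1e-3  # illustrative per-gate error rate (assumption)
for n in (100, 1_000, 10_000):
    print(n, f"{success_probability(p, n):.3g}")
print(max_gates(p, 0.5))  # 692
```

At 0.1% error per gate, a 1,000-gate circuit finishes error-free only about 37% of the time. The odds fall below 50% after 692 gates.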

Quantum error correction has therefore become the bottleneck: how to detect and fix qubit errors on the fly, so that a quantum computation can run longer and deeper than the raw hardware’s noise would otherwise allow. This is the critical missing piece to move from today’s proof-of-concept quantum processors to tomorrow’s workhorse engines that deliver real-world value.

The Quest for Fault Tolerance

The solution, in theory, is to combine many unstable qubits into one very stable logical qubit through clever encoding, much like combining multiple unreliable components can yield a highly reliable system. In practice, however, early schemes required exorbitant overhead. For example, the popular “surface code” approach might need on the order of 1,000 or more physical qubits to encode one logical qubit with sufficiently low error rates. This overhead is so large that until recently no one had demonstrated a logical qubit that actually improved on physical qubits.
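The simplest form of this idea is a classical repetition code: store a bit n times and take a majority vote. The sketch below computes how often the vote fails when each copy flips independently with probability p. Treat it only as an analogy, since it models bit flips alone. Quantum codes such as the surface code cannot copy quantum states and must also catch phase errors, so they measure parity checks instead of comparing copies. The 1% error rate is an illustrative assumption.

```python
from math import comb

def majority_vote_failure(p: float, n: int) -> float:
    """Probability that more than half of n copies flip, so the vote returns the wrong bit.

    Assumes n is odd and each copy flips independently with probability p.
    """
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n // 2 + 1, n + 1))

p = 0.01  # illustrative physical error rate (assumption)
for n in (1, 3, 5, 7):
    print(n, f"{majority_vote_failure(p, n):.2e}")
```

With p = 1%, each additional pair of copies cuts the failure rate by more than an order of magnitude. That is the sense in which many unreliable parts add up to one reliable whole.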

The field has long been stuck short of the fault-tolerance threshold. This is the physical error rate below which each increase in code size lowers, rather than raises, the logical error rate, so a machine can scale up without being swallowed by noise. Operating below that threshold means a quantum computer’s logical error rates can be pushed as low as a computation requires by adding more physical qubits. That allows millions or billions of operations, and in effect the device behaves like a near-ideal quantum computer.
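A widely used rule of thumb captures this threshold behavior. For a surface code of distance d, the logical error rate scales roughly as A·(p/p_th)^((d+1)/2), where p is the physical error rate and p_th is the threshold. The sketch assumes p_th = 1%, the commonly quoted ballpark for the surface code, and an illustrative prefactor A = 0.03. Both are assumptions, not measured values.

```python
def logical_error_rate(p: float, d: int, p_th: float = 0.01, prefactor: float = 0.03) -> float:
    """Heuristic surface-code logical error rate per correction cycle (rule of thumb).

    p_th and prefactor are illustrative assumptions, not measured values.
    """
    return prefactor * (p / p_th) ** ((d + 1) // 2)

distances = (3, 5, 7)
for p in (0.001, 0.015):  # one physical error rate below threshold, one above
    rates = [logical_error_rate(p, d) for d in distances]
    trend = "shrinks" if rates[-1] < rates[0] else "grows"
    summary = ", ".join(f"d={d}: {r:.1e}" for d, r in zip(distances, rates))
    print(f"p = {p}: {summary} -> error {trend}")
```

Below threshold, each step up in code distance suppresses errors further. Above it, a bigger code only adds more places for errors to occur.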

Achieving fault tolerance has proven incredibly challenging. Researchers at Google only recently demonstrated that adding more qubits to an error-correcting code can reduce the logical error rate (rather than increase it), a milestone result using a 105-qubit superconducting chip named Willow.

Google reported that on Willow, logical qubits based on the surface code became exponentially more reliable as the code size grew, “cracking a key challenge in quantum error correction that the field has pursued for almost 30 years.” It was a crucial proof of concept that quantum error correction can work. Still, surface codes remain resource-hungry. Building a full fault-tolerant machine with them might require a million or more physical qubits and extremely fast classical control systems, a grand engineering challenge likely spanning many years.
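The “million or more” figure follows from simple arithmetic. A common surface-code layout, the rotated surface code, uses d² data qubits plus d² − 1 measurement qubits per logical qubit, or 2d² − 1 in total. The distance d = 25 and the target of 1,000 logical qubits below are illustrative assumptions. The count also ignores extra qubits for routing and magic-state preparation, so real totals would be higher.

```python
def surface_code_qubits(distance: int) -> int:
    """Physical qubits for one logical qubit in a rotated surface code:
    distance**2 data qubits plus distance**2 - 1 measurement qubits."""
    return 2 * distance**2 - 1

def machine_size(logical_qubits: int, distance: int) -> int:
    """Total physical qubits, ignoring routing and magic-state overhead."""
    return logical_qubits * surface_code_qubits(distance)

print(surface_code_qubits(25))   # 1249
print(machine_size(1_000, 25))   # 1249000
```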

IBM’s Breakthrough: Solving Fault Tolerance with LDPC Codes

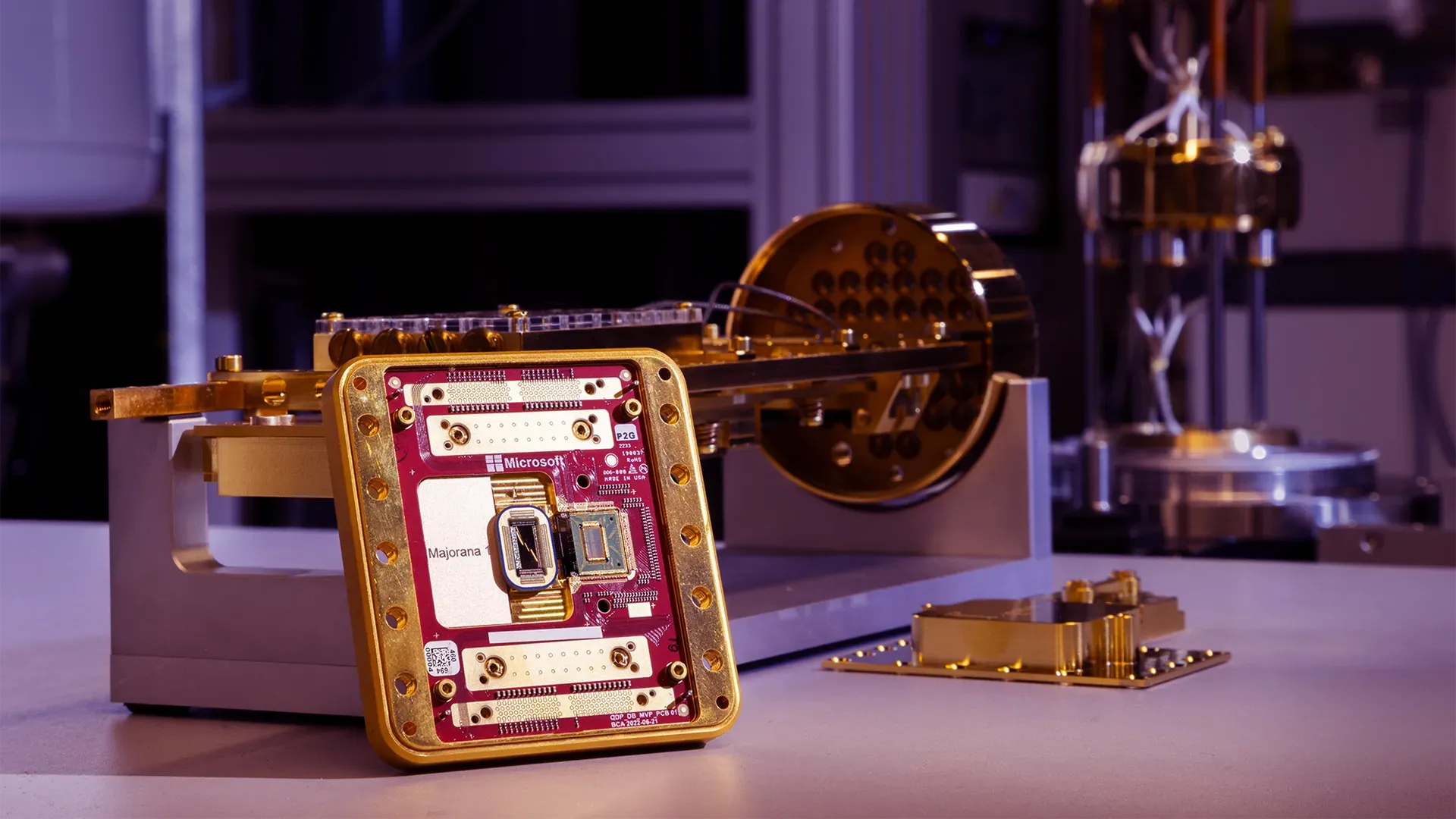

Enter IBM’s new approach. IBM has declared that it has “solved the science” behind fault-tolerant quantum computing and is now moving to the engineering phase. This bold claim comes on the heels of IBM’s June 2025 announcement of IBM Quantum Starling, slated to be the world’s first large-scale fault-tolerant quantum computer by 2029.

IBM’s confidence stems from breakthrough research in error-correcting codes and architectures. As IBM CEO Arvind Krishna put it, “IBM is charting the next frontier in quantum computing… our expertise across mathematics, physics, and engineering is paving the way for a large-scale, fault-tolerant quantum computer – one that will solve real-world challenges and unlock immense possibilities for business.” In other words, IBM believes it has finally assembled all the scientific pieces required to tame quantum errors at scale.

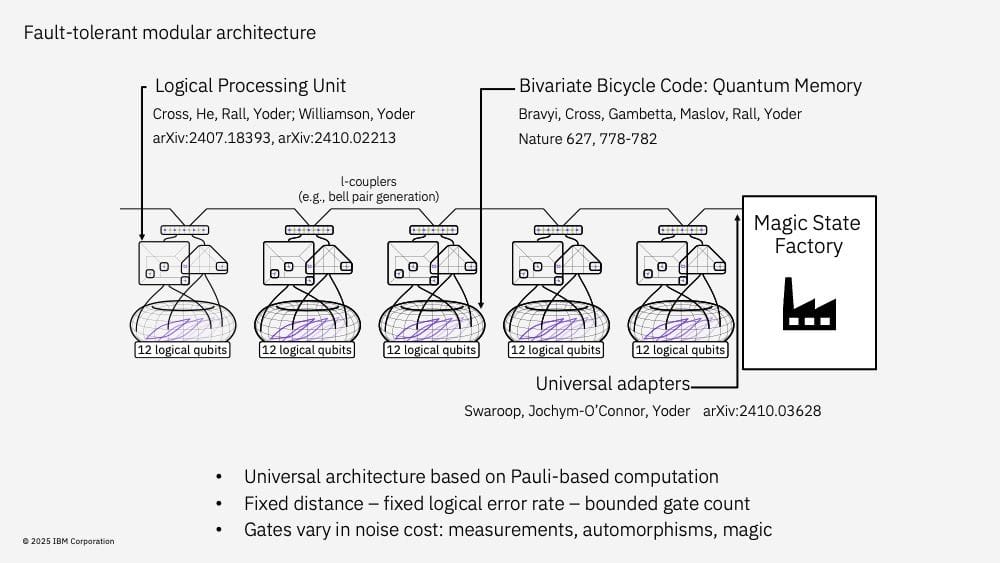

At the heart of IBM’s breakthrough lies a family of quantum low-density parity-check (LDPC) codes, specifically bivariate bicycle (BB) codes, in which each parity check involves only a handful of qubits. These codes sharply reduce the number of physical qubits needed for reliable, fault-tolerant computation, bringing practical quantum computing much closer to reality. IBM’s best-known example, the [[144,12,12]] “gross” code, protects 12 logical qubits with 288 physical qubits (144 data plus 144 check qubits). That is roughly one-tenth of what the surface code would need for comparable protection, which greatly improves scalability.
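The gross code’s qubit counts can be checked directly. The sketch below follows the bivariate bicycle construction published by IBM researchers in 2024, as understood here. It uses cyclic shifts x and y on a 12 × 6 grid and check matrices H_X = [A | B] and H_Z = [Bᵀ | Aᵀ], with A = x³ + y + y² and B = y³ + x + x². The script confirms three things:

- every check touches just six qubits, which is the “low-density” part
- X and Z checks commute
- the 144 data qubits encode k = n − rank(H_X) − rank(H_Z) logical qubits

The closing comparison with a distance-12 surface code is a rough back-of-the-envelope estimate, not IBM’s published figure.

```python
# Gross code sketch: l = 12, m = 6, A = x^3 + y + y^2, B = y^3 + x + x^2.
L_SIZE, M_SIZE = 12, 6
BLOCK = L_SIZE * M_SIZE                 # 72 qubits per block; two blocks of data qubits

A_TERMS = [(3, 0), (0, 1), (0, 2)]      # monomials x^a y^b as (a, b)
B_TERMS = [(0, 3), (1, 0), (2, 0)]

def position(i: int, j: int) -> int:
    """Index of grid cell (i, j), wrapping around in both directions."""
    return (i % L_SIZE) * M_SIZE + (j % M_SIZE)

def block_row(terms, i, j, transpose=False):
    """Row (i, j) of the 72x72 matrix for a polynomial, as a bitmask.

    Each monomial x^a y^b is a cyclic shift; the transpose shifts the other way.
    """
    sign = -1 if transpose else 1
    row = 0
    for a, b in terms:
        row ^= 1 << position(i + sign * a, j + sign * b)
    return row

def check_matrices():
    """Return H_X = [A | B] and H_Z = [B^T | A^T], one integer bitmask per row."""
    hx, hz = [], []
    for i in range(L_SIZE):
        for j in range(M_SIZE):
            hx.append(block_row(A_TERMS, i, j) | (block_row(B_TERMS, i, j) << BLOCK))
            hz.append(block_row(B_TERMS, i, j, True) | (block_row(A_TERMS, i, j, True) << BLOCK))
    return hx, hz

def gf2_rank(rows):
    """Rank over GF(2) by Gaussian elimination on integer bitmasks."""
    pivots = {}                         # leading bit -> row with that leading bit
    for row in rows:
        while row:
            top = row.bit_length() - 1
            if top not in pivots:
                pivots[top] = row
                break
            row ^= pivots[top]
    return len(pivots)

hx, hz = check_matrices()
n = 2 * BLOCK                                        # 144 data qubits
row_weights = {bin(r).count("1") for r in hx + hz}   # qubits touched per check
commute = all(bin(x & z).count("1") % 2 == 0 for x in hx for z in hz)
k = n - gf2_rank(hx) - gf2_rank(hz)                  # logical qubits
physical = n + len(hx) + len(hz)                     # data plus one check qubit per row

print(row_weights, commute, k, physical)   # {6} True 12 288

surface = k * (2 * 12**2 - 1)   # rough: 12 rotated surface-code patches at d = 12
print(surface, round(surface / physical, 1))
```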

To implement these codes physically, IBM redesigned its quantum chip architecture. BB codes require each qubit to interact with some qubits that are not its nearest neighbors, so the new design adds longer-range on-chip connections called "C-couplers," which let more distant qubits interact while maintaining accuracy. IBM reports that early tests of this design are promising, which is critical for large-scale quantum machines.

IBM’s design is modular: instead of one huge quantum processor, many smaller fault-tolerant modules are linked together. Each module contains error-corrected qubits along with logical processing units (LPUs) that carry out operations on those encoded qubits.

Additional "L-couplers" connect qubits across modules, so the system can scale by adding modules. IBM’s planned sequence of processors, Quantum Loon (2025), Quantum Kookaburra (2026), and Quantum Cockatoo (2027), will culminate in IBM Quantum Starling by 2029. Starling is designed to run 100 million quantum operations on 200 logical qubits, far beyond what today’s quantum processors can execute reliably.

What Starling’s Success Could Mean for Business

For business leaders, this means practical quantum computing isn’t decades away; it’s becoming achievable within this decade. Organizations should prepare now, exploring applications in pharmaceuticals, finance, logistics, and more, so they can leverage quantum computing’s transformative potential as soon as it arrives.

If IBM delivers a working 200-logical-qubit machine by 2029 that can reliably run 100 million-gate quantum circuits, the computational capabilities unleashed would be unprecedented. Tasks that are practically impossible today could become routine.
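A rough error budget shows what reliably running 100 million-gate circuits demands. Assume, as a simplification, independent logical errors at rate ε per operation. The chance that N operations all succeed is then about e^(−Nε). With N = 10^8 and a 90% success target:

```latex
P_{\text{success}} = (1-\varepsilon)^{N} \approx e^{-N\varepsilon},
\qquad
e^{-N\varepsilon} \ge 0.9
\;\Longrightarrow\;
\varepsilon \le \frac{-\ln 0.9}{N} \approx \frac{0.105}{10^{8}} \approx 1.1 \times 10^{-9}.
```

That is roughly a million times lower than the ~10⁻³ error rates of today’s better physical gates. This is the gap error correction has to close.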

For example, a fault-tolerant quantum computer with hundreds of logical qubits could simulate complex molecules and chemical reactions far more accurately than classical approximations allow, accelerating drug discovery and materials science research. In healthcare and pharmaceuticals, this might lead to designing new medications or vaccines in a fraction of the time. In finance, quantum algorithms could more efficiently optimize large investment portfolios, perform risk analysis, or tackle complex derivatives-pricing problems, potentially giving an edge in markets or enabling new financial products.

Logistics and supply chain management could see quantum computers tackling enormous optimization problems (like globally routing deliveries or scheduling production) far faster, leading to cost savings and efficiency gains that compound across industries. Machine learning and AI might also benefit, as certain quantum algorithms could handle high-dimensional data or complex models beyond classical capacity.

Quantum advantage for some real-world use cases may arrive even sooner: IBM expects it around 2026. These early advantage scenarios will likely involve a hybrid approach. Quantum processors act as accelerators for specific parts of a workflow in chemistry, optimization, or machine learning, while the rest runs on classical HPC systems.
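The hybrid pattern can be sketched as a variational loop, the structure behind methods such as the variational quantum eigensolver (VQE). A classical optimizer repeatedly calls a quantum subroutine and updates its parameters. In this sketch the “quantum” call is a hypothetical stand-in that prepares RY(θ)|0⟩ and returns ⟨Z⟩ = cos θ, computed classically here. On real hardware it would be estimated from repeated measurements. Gradients come from the parameter-shift rule, which needs only two extra circuit evaluations per parameter.

```python
import math

def quantum_expectation(theta: float) -> float:
    """Hypothetical stand-in for a quantum processor call.

    Models RY(theta)|0> and returns <Z> = cos(theta). Real hardware would
    estimate this value from many measurement shots.
    """
    return math.cos(theta)

def parameter_shift_gradient(theta: float) -> float:
    """Parameter-shift rule for RY: d<Z>/dtheta = [E(theta + pi/2) - E(theta - pi/2)] / 2."""
    shift = math.pi / 2
    return (quantum_expectation(theta + shift) - quantum_expectation(theta - shift)) / 2

def hybrid_minimize(theta: float = 0.1, learning_rate: float = 0.4, steps: int = 100):
    """Classical gradient descent that calls the 'quantum' subroutine each step."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)

theta, energy = hybrid_minimize()
print(f"theta = {theta:.4f}, <Z> = {energy:.4f}")  # theta = 3.1416, <Z> = -1.0000
```

The optimizer drives θ toward π, where ⟨Z⟩ reaches its minimum of −1. In a real workload, the expectation value would encode something like a molecular energy or an optimization cost.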

IBM is confident that by 2026 its quantum systems (like the upcoming 120-qubit Nighthawk processor) will solve some problems more efficiently than classical computers alone. Those initial advantages will be niche, but they’re crucial stepping stones. Companies that start experimenting with quantum algorithms now will be better positioned to exploit those breakthroughs when they happen. In contrast, waiting until a fully fault-tolerant machine arrives in 2029 could mean falling behind competitors who have spent years developing quantum-ready applications.

What Should Business Leaders Do?

Practical steps to take today include:

- Educate and Upskill: Develop a basic understanding of quantum computing’s principles and potential impact in your industry. Build a team (even a small one) of quantum-aware talent. Many companies are appointing “quantum ambassadors” or task forces within their R&D groups to track developments.

- Experiment and Partner: Use the quantum cloud services already available (IBM Quantum, Google Quantum AI, Amazon Braket, Microsoft Azure Quantum, etc.) to run prototype experiments. Even with today’s noisy devices, you can explore algorithms for optimization, machine learning, or chemistry on small scales.

- Identify Use Cases: Analyze your business’s most complex computational problems. Which challenges might be intractable for classical systems but potentially solvable with quantum? Examples might be massive combinatorial optimizations (scheduling, routing, portfolio construction), large-scale simulations (for new materials or drugs), or advanced cryptography and data security tasks. Prioritize a few high-impact use cases and monitor how quantum algorithms for those tasks progress.

- Invest in Quantum-Ready Infrastructure: As we approach fault-tolerant machines, think about integration. Quantum computers will likely work alongside classical supercomputers, so ensure your IT infrastructure and partnerships can accommodate that (for instance, IBM’s vision involves quantum integrated with classical HPC in the cloud). Also plan for post-quantum cryptography. A sufficiently powerful quantum computer would threaten today’s public-key encryption, so migrating to quantum-resistant algorithms is a separate but related priority, especially for financial and sensitive-data firms.

In short, leaders should treat quantum as an emerging disruptive technology on the horizon of the enterprise. Much as AI went from academic labs to transforming industries in a decade, quantum computing is on a similar trajectory. It’s not a question of “if,” but “when” and “who” will harness it first.

IBM’s Starling announcement signals that the timeline for practical quantum computing is becoming clearer and closer. As Arvind Krishna highlighted, this next tier of computing “will solve real-world challenges and unlock immense possibilities for business”. The prudent strategy is to prepare now: develop a quantum strategy, start small pilots, and remain agile as the technology matures.

Conclusion

The message to the C-suite is clear: IBM says the science of fault-tolerant quantum computing is largely solved, the engineering is well underway, and the applications are on the horizon.

IBM’s plan to build the first fault-tolerant quantum computer by 2029 is audacious. It is backed by tangible scientific advances, from new error-correcting codes to fast decoders and modular architectures, though some of that work has not yet been peer-reviewed. If successful, Starling will mark the dawn of a new era in computing, one where quantum computers can tackle problems of staggering complexity with relative ease.

The ripple effects across industries could be profound, ushering in breakthroughs in medicine, finance, logistics, and beyond. Businesses that have been following quantum computing’s evolution are already gearing up for this shift. Those that haven’t need to start catching up: the next few years are a critical window to get quantum-ready.