Why We Should Ban Lethal Autonomous Weapons

A few days ago, some of the world’s most renowned entrepreneurs, engineers and scientists in the technology industry warned of the dangers of artificial intelligence and of lethal autonomous weapons. Over 150 companies and 2,400 individuals from 90 countries signed the lethal autonomous weapons pledge at the 2018 International Joint Conference on Artificial Intelligence. I signed it as well, because I believe we should do whatever it takes to stop lethal autonomous weapons, which could seriously destabilise global society.

Artificial intelligence is already becoming increasingly advanced, and it is poised to play a major role in every industry, not only the military. With that, it will become a central component of our lives, affecting most of the decisions we take on a daily basis. When that happens, it is vital that we remain in control of artificial intelligence, that biased data does not take over, and that we prevent AI systems from becoming black boxes that we no longer understand.

Unexpected AI Behaviour

Researchers have already observed behaviour in AI-only networks that became difficult to control and understand. Examples include algorithms that started competing against each other using novel tactics, algorithms that developed their own new encryption methods, and algorithms that, unprompted, created their own secret language that their developers could not understand. In each case, the algorithm acted differently than intended and showed unexpected behaviour. Imagine what happens when AI linked to weapons starts to behave unexpectedly.

The challenge is to understand how we can create artificially intelligent agents that behave as planned and that we can understand completely. Artificial intelligence, after all, has fundamentally different motivations than humans have. While humans are often driven by status, sex and money, AI is driven by logic and probability. Artificial intelligence’s only objective is to complete or maximise its ultimate goal. If engineers do not develop AI correctly, it will try to achieve that goal regardless of human norms, values and ethics. Hence, it is important that we prevent AI from harming humans autonomously, because doing so could lead to completely different outcomes than expected, outcomes that could be seriously destabilising for societies and individuals.

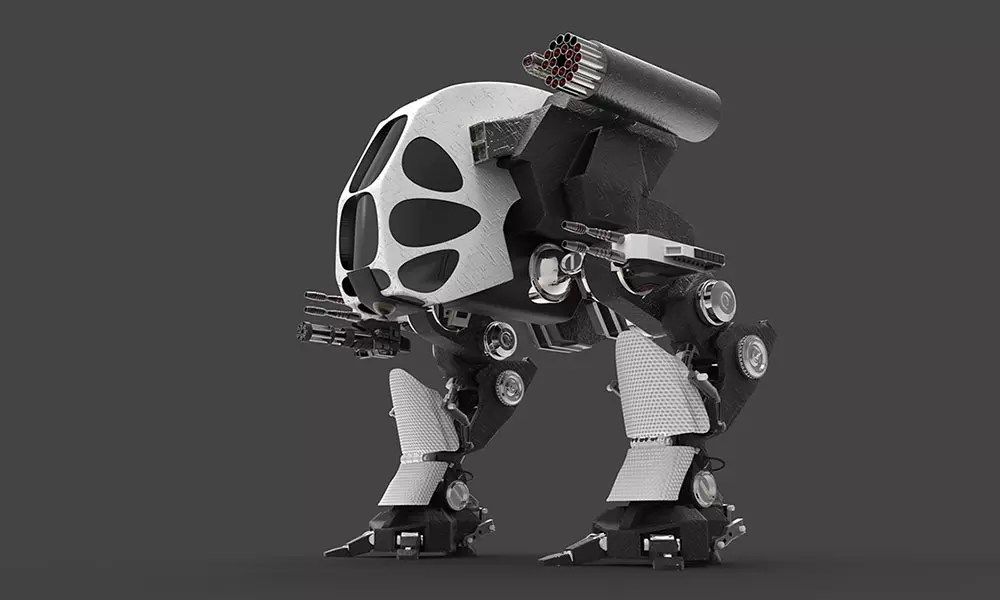

Slaughterbots

Therefore, the best approach would be to ensure that lethal autonomous weapons are a no-go area for every developer, researcher, organisation and government. If we do not prevent AI from harming humans, and do not control or understand how AI behaves in different situations, AI can easily turn against humans, as depicted in the disturbing Slaughterbots video.

As the video shows, artificial intelligence poses risks unlike any other existential risk humanity has faced before, such as nuclear war. As such, it requires a fundamentally different approach and a collective global response. The fact that leading organisations, engineers and scientists are calling for a ban on lethal autonomous weapons is a step in the right direction. If governments around the globe do not act accordingly, a lethal AI arms race could easily follow and add to the world’s existing problems.

However, just banning lethal autonomous weapons is not enough. To control AI, we also need to become better at understanding advanced artificial intelligence, its objectives and its motivations. This field of research is called Explainable AI. It originated in the military, with the aim of understanding why a certain decision was made. The more sophisticated AI becomes, the less obvious its behaviour is to its developers and users, and the more control is lost to AI.

Explainable AI can help us remain in control as AI becomes more advanced. However, sophisticated Explainable AI is very challenging to achieve, as it basically means AI debugging its own code in plain language. Because sophisticated Explainable AI is still far away, a pledge that calls upon governments and government leaders to create a future with strong international norms, regulations and laws against lethal autonomous weapons is a good first step. So, if you agree with the above and also want to ban lethal autonomous weapons, then sign the pledge as well.

Image: Josh McCann/Shutterstock