Killer Robots: Progress or Pandora’s Box?

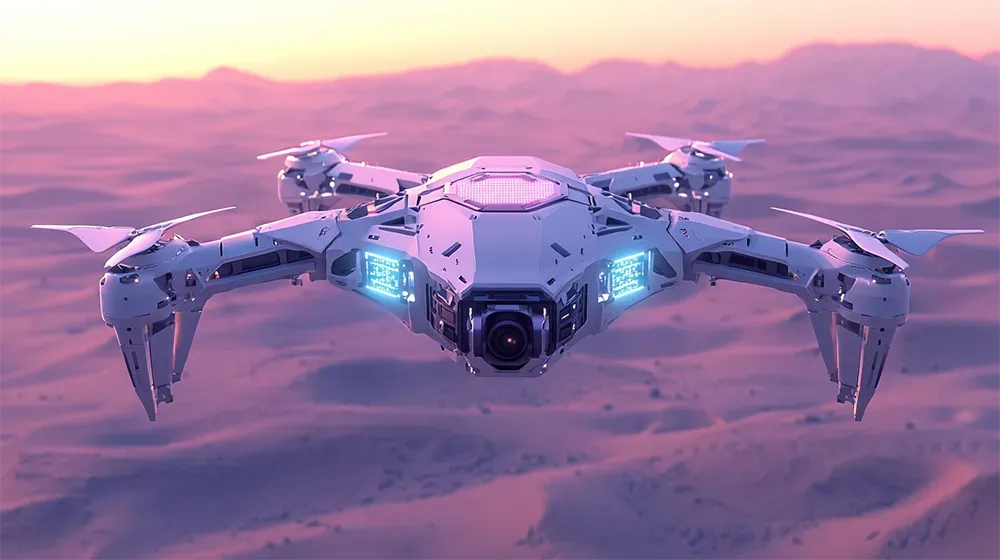

What happens when machines gain the power to decide life and death? The future of warfare is knocking, and it sounds like a drone.

The rise of autonomous weapons systems, such as Fortem Technologies’ DroneHunter, marks a pivotal moment in military technology. DroneHunter is semi-autonomous: it uses AI to detect and neutralize airborne threats while a human operator remains "in the loop."

However, as global conflicts intensify, the push for fully autonomous weapons grows. While advocates argue these technologies could save soldiers' lives and reduce casualties, critics highlight risks such as escalation from algorithmic errors or misuse by rogue actors.

- U.S. programs like Replicator aim to mass-deploy uncrewed systems by 2025.

- Advocacy groups push for treaties regulating autonomous weapons, citing ethical and accountability concerns.

- Current AI limitations mean these systems can misidentify targets, which could increase civilian casualties.

This technological race forces us to ask: Can machines handle the moral weight of warfare, or are we automating the next arms race? Share your thoughts below.

Read the full article on Undark.

----

💡 We're entering a world where intelligence is synthetic, reality is augmented, and the rules are being rewritten in front of our eyes.

Staying up to date in a fast-changing world is vital. That is why I have launched Futurwise, a personalized AI platform that transforms information chaos into strategic clarity. With one click, users can bookmark any article, report, or video and summarize it in seconds, tailored to their tone, interests, and language. Visit Futurwise.com to get started for free!