When AI Gets It Wrong: Why Its Mistakes Are Stranger Than Ours

AI doesn’t just make mistakes; it makes bizarre, confident blunders that no human would ever dream of.

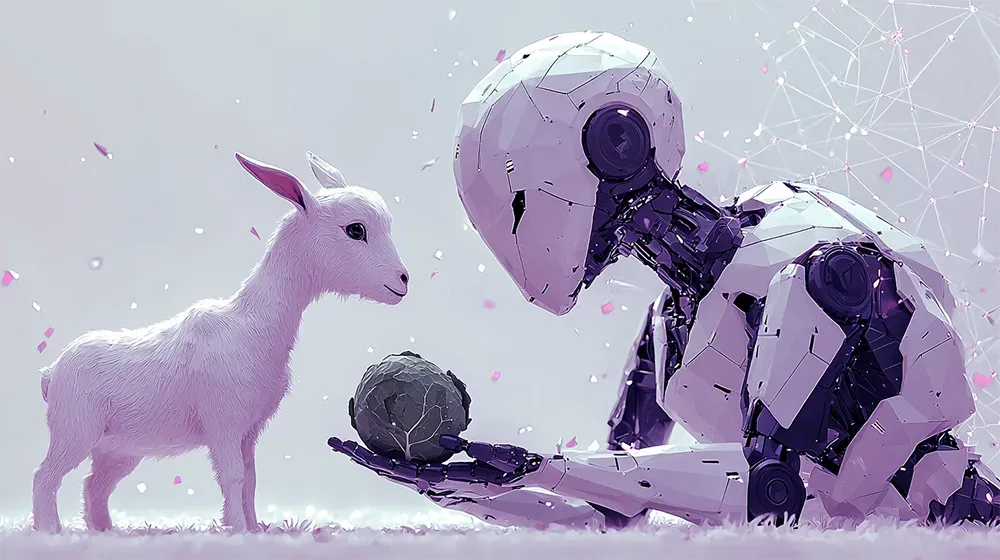

AI systems such as large language models (LLMs) make mistakes that defy human logic. Unlike human errors, theirs don’t cluster around areas of weakness, nor are they accompanied by signs of uncertainty. An AI might excel at calculus while confidently suggesting that cabbages eat goats.

This unpredictability complicates trust, especially in high-stakes scenarios. To address this, researchers propose two paths: teach AI to make human-like mistakes or build systems that adapt to its odd errors.

Methods like reinforcement learning from human feedback (RLHF) have shown promise, but newer tools like iterative questioning are uniquely suited to AI’s quirks. The open question remains: if we can’t predict AI’s mistakes, how do we safely integrate it into critical decision-making?

Read the full article on IEEE Spectrum.
